Maximize Efficiency with Web Scraping & Automation Tools in 2025

There’s a unique thrill in watching a bot perform tasks that would ordinarily take a person hours. With just a few lines of code, web scraping and automation tools can extract clean data from chaotic websites, transforming noise into structure. These tools are no longer niche; they are the invisible engines driving research, startups, and competitive small businesses. The internet holds vast information, but it’s scattered and challenging to collect manually.

These tools simplify the process, cutting through the noise and eliminating repetitive work. In 2025, they are not just useful; they are critical to modern digital workflows, powering smarter, faster ways of working.

Understanding the Essentials of Web Scraping

Web scraping involves extracting data from websites and converting it into usable formats like CSV and JSON, or directly into databases. The process involves sending a request to a webpage, fetching the HTML content, and parsing that content to extract the desired data. While it sounds straightforward, websites are rarely constructed the same way, and many actively attempt to block scraping bots. Tools play a crucial role by streamlining the complex parts and making scraping more accessible.
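The parse-and-export half of that process can be sketched with Python's standard library alone. This is a minimal illustration, not a production scraper: the HTML snippet, the `product`, `name`, and `price` class names, and the helper names are all assumptions made up for the example, and the page is hard-coded so the sketch runs without a network request.

```python
import csv
import io
import json
from html.parser import HTMLParser

# Hypothetical page content; a real scraper would fetch this over HTTP.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Kettle</span><span class="price">24.99</span></li>
  <li class="product"><span class="name">Toaster</span><span class="price">31.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects name/price pairs from spans inside <li class="product">."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._field = None  # name of the field currently being read, if any

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "li" and cls == "product":
            self.rows.append({})  # start a new record
        elif tag == "span" and cls in ("name", "price") and self.rows:
            self._field = cls

    def handle_data(self, data):
        if self._field:
            self.rows[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def parse_products(html):
    parser = ProductParser()
    parser.feed(html)
    return parser.rows


def to_csv(rows):
    """Serialize the extracted rows as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = parse_products(SAMPLE_HTML)
print(json.dumps(rows))  # JSON export
print(to_csv(rows))      # CSV export
```

In practice, the fetching step and a more forgiving parser would replace the hard-coded string and the hand-written `HTMLParser` subclass, but the shape of the work stays the same: fetch, parse, extract, export.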

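Modern web scraping tools often come with built-in features for navigating JavaScript-driven sites, handling pagination, and coping with anti-bot measures. Libraries like BeautifulSoup, Scrapy, and Playwright give programmers fine-grained control, though they cover different parts of the job: BeautifulSoup parses HTML that has already been fetched, Scrapy is a full crawling framework, and Playwright drives a real browser, which makes it suited to JavaScript-heavy pages. They are efficient and can be customized for tasks ranging from grabbing product listings in online stores to compiling government reports.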
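Pagination is the easiest of these features to picture in code. The sketch below follows "next page" links until none remain; the `PAGES` dictionary and `fetch_page` function are hypothetical stand-ins for real HTTP requests and parsing, so the loop can be read and run in isolation.

```python
# Hypothetical in-memory "site": each page lists items and names the next page.
PAGES = {
    "/jobs?page=1": {"items": ["Data Analyst", "QA Engineer"], "next": "/jobs?page=2"},
    "/jobs?page=2": {"items": ["Backend Developer"], "next": "/jobs?page=3"},
    "/jobs?page=3": {"items": ["Support Lead"], "next": None},
}


def fetch_page(url):
    """Stand-in for an HTTP request plus parsing; returns one page's data."""
    return PAGES[url]


def crawl(start_url, max_pages=50):
    """Follow 'next' links, collecting items until the links run out."""
    items, url, seen = [], start_url, set()
    # Stop on a missing link, a loop back to a visited page, or the page cap.
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        page = fetch_page(url)
        items.extend(page["items"])
        url = page["next"]
    return items


all_jobs = crawl("/jobs?page=1")
print(all_jobs)
```

The `seen` set and `max_pages` cap are a design choice worth keeping in any real crawler: sites sometimes link pages in a cycle, and an uncapped loop can hammer a server indefinitely.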

On the no-code side, tools like Octoparse and ParseHub allow users to create scraping workflows using drag-and-drop interfaces. They are perfect for non-technical individuals who need access to large datasets. Whether it’s real estate deals, scholarly papers, or social media posts, these tools enable users to extract insights without writing a single line of code.

Despite their usefulness, it’s not always legal or ethical to collect data from every site. Responsible scraping means reading terms of service, respecting robots.txt rules, and being transparent about data usage. In a world increasingly focused on digital rights, ethical scraping is becoming just as important as efficient scraping.
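Checking robots.txt before fetching a page can be automated. A minimal sketch using Python's built-in `urllib.robotparser` follows; the robots.txt content, the `example-research-bot` agent name, and the URLs are invented for illustration, and the rules are parsed from a string rather than downloaded.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real check would download the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_text):
    """Load robots.txt rules from text instead of fetching them over the network."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


AGENT = "example-research-bot"  # assumed user-agent name for this sketch
parser = build_parser(ROBOTS_TXT)

allowed = parser.can_fetch(AGENT, "https://example.com/listings")
blocked = parser.can_fetch(AGENT, "https://example.com/private/data")
delay = parser.crawl_delay(AGENT)  # seconds to wait between requests, if declared

print(allowed, blocked, delay)
```

A robots.txt check covers only one part of responsible scraping. It does not replace reading a site's terms of service or thinking about how the collected data will be used.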

Automation Tools: Moving Beyond Scraping

Web scraping is just one part of the equation. Automation tools extend what can be accomplished once data is collected, or even without scraping at all. These tools handle routine, repetitive, and time-consuming tasks. Consider form submissions, account creations, reporting dashboards, or bulk file conversions. Automating these tasks frees up time, reduces errors, and scales operations.

Selenium, for example, can simulate user interactions in a browser. It’s not just scraping: it’s clicking buttons, filling out forms, downloading files, and more. While often used in testing environments, it can also be a powerful web automation engine. Puppeteer offers similar functionality through a Node.js API built around Chrome, typically run in headless mode, which gives scripts fine-grained control over the browser.

Platforms like Zapier, Make (formerly Integromat), and n8n are revolutionizing workflow automation. They allow users to connect apps and services in automated flows. For example, when a new post is published on a job board, a scraper can pull the info, Zapier can send it to a Google Sheet, and an email alert can be triggered—all seamlessly. These tools excel in API integration, making them highly customizable and scalable for personal or team workflows.
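To make the job-board flow concrete, here is a rough local sketch of the same three steps in plain Python: take a scraped post, append it as a row to a sheet, and prepare an email alert. It does not use Zapier or Google Sheets; an in-memory CSV stands in for the spreadsheet, the message is built but never sent, and the post data, addresses, and function names are all hypothetical.

```python
import csv
import io
from email.message import EmailMessage


def append_row(sheet, post):
    """Write one scraped post as a CSV row (stand-in for a spreadsheet append)."""
    writer = csv.writer(sheet, lineterminator="\n")
    writer.writerow([post["title"], post["company"], post["url"]])


def build_alert(post, recipient):
    """Prepare an email alert for the post; sending is left out of this sketch."""
    message = EmailMessage()
    message["To"] = recipient
    message["Subject"] = f"New job post: {post['title']}"
    message.set_content(f"{post['title']} at {post['company']}\n{post['url']}")
    return message


# Hypothetical result of the scraping step.
post = {
    "title": "Data Analyst",
    "company": "Example Corp",
    "url": "https://example.com/jobs/1",
}

sheet = io.StringIO()  # in-memory stand-in for the spreadsheet
append_row(sheet, post)
alert = build_alert(post, "team@example.com")

print(sheet.getvalue())
print(alert["Subject"])
```

Workflow platforms wrap each of these steps in a prebuilt connector, which is what makes them attractive when the apps involved already expose APIs.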

The integration of AI with automation is another growing trend. ChatGPT-style bots are now being incorporated into scraping and automation flows to interpret data, write summaries, or make decisions. For instance, a scraped review can be analyzed for sentiment, categorized, and reported in real time—all without human intervention. This blend of automation and intelligence is transforming task delegation.

Practical Use Cases in the Real World

The application of web scraping and automation tools isn’t just theoretical; it’s providing real value across diverse industries. In e-commerce, companies use these tools to monitor competitor pricing, stock changes, and product availability. A simple nightly script can scan hundreds of stores, detect shifts, and automatically update product listings, helping businesses remain agile and competitive.
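The "detect shifts" step of such a nightly script might look like the sketch below. It assumes the scraper has already produced two snapshots mapping product names to prices; the product names, prices, and the `detect_changes` helper are invented for the example.

```python
from decimal import Decimal


def detect_changes(previous, current):
    """Compare two {product: price} snapshots and describe what shifted."""
    changes = []
    for product in sorted(previous.keys() | current.keys()):
        old, new = previous.get(product), current.get(product)
        if old is None:
            changes.append((product, "added", new))
        elif new is None:
            changes.append((product, "removed", old))
        elif new != old:
            changes.append((product, "price_changed", new - old))
    return changes


# Hypothetical snapshots from two consecutive nightly runs.
yesterday = {
    "kettle": Decimal("24.99"),
    "toaster": Decimal("31.50"),
    "blender": Decimal("59.00"),
}
today = {
    "kettle": Decimal("22.49"),
    "toaster": Decimal("31.50"),
    "mixer": Decimal("89.00"),
}

changes = detect_changes(yesterday, today)
for product, kind, value in changes:
    print(product, kind, value)
```

Prices are held as `Decimal` rather than `float` so that differences such as 22.49 minus 24.99 come out exact, which matters once the results feed into automated listing updates.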

Marketing teams leverage scraping and automation to build targeted outreach systems. Contact information from directories or social platforms is collected and fed into CRMs. Automated tools then send personalized emails, update lead statuses, and schedule follow-ups, streamlining the entire funnel.

Researchers and journalists rely on scraping to collect and monitor data that would be impossible to gather manually. Whether it’s government statistics or academic journals, automation scales the process. In healthcare, scraping is used to stay updated on health advisories, while automation supports everything from appointment reminders to backend billing.

On a personal level, these tools assist individuals in tracking flight deals, monitoring rental prices, and managing schedules. With minimal setup, scraping and automation work quietly in the background, making daily life more organized, efficient, and informed.

The Future of Scraping and Automation Tools

The future of web scraping and automation tools is promising, with increasing power and accessibility. As low-code and no-code platforms become mainstream, even non-technical users can build automated workflows and extract structured data with ease.

AI is also reshaping these tools’ capabilities. From adapting to website changes automatically to analyzing data and making decisions, scraping and automation tools are evolving to think rather than just follow rules. At the same time, evolving data privacy regulations and site defenses are pushing tools to become more ethical and adaptive.

In a world driven by digital processes, these tools are becoming indispensable—not just for developers but for anyone aiming to stay productive and ahead of the curve.

Conclusion

Web scraping and automation tools have become vital for managing data and streamlining tasks in today’s digital landscape. From collecting information to automating workflows, they save time, reduce errors, and enhance productivity. As these tools continue to evolve with AI integration and no-code platforms, they offer even more power to everyday users. Whether for business or personal use, embracing these tools is about working smarter in a world rich with digital opportunities.

Related Articles

Which is Better for Your Business: ConvertKit vs. ActiveCampaign

Your Guide to Facebook Automation: Save Time & Boost Engagement

Discover the 9 Best Tools for Modern Web Design in 2025

How to Choose the Best Automation Software for Your Business: A Guide

SkedPal vs. Motion: A Detailed Comparison to Find Your Perfect Scheduling App

19 Zoom Tips and Tricks for Better Video Meetings: Master Virtual Communication

7 Best M4A to WAV Converters for High-Quality Audio Transformation

Top Keyword Research Tools

20+ Best Digital Marketing Tools

Why You’ll Need a New App to Use Gemini on Your iPhone

Top 9 Apps to Identify Anything Through Your Phone's Camera

Transform Your HR Department with These 6 Automation Strategies

Popular Articles

Find Your Ideal Photo Editing Software: 7 Lightroom Alternatives to Consider

How to Use Free Tools Online to Convert EPUB Files to PDF Format

11 Best Free Screen Recorders Without Watermark

Upgrade Your Email Experience: The 7 Best Email Clients for Windows

The 9 Best AI Recruiting Tools

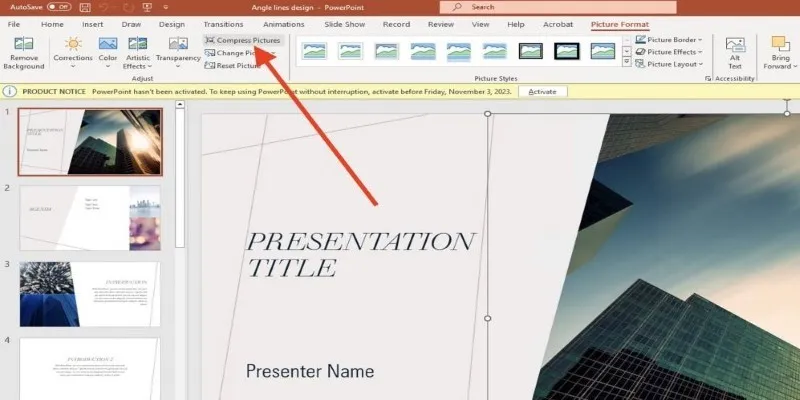

PowerPoint Image Compression: Reduce File Size Without Sacrificing Clarity

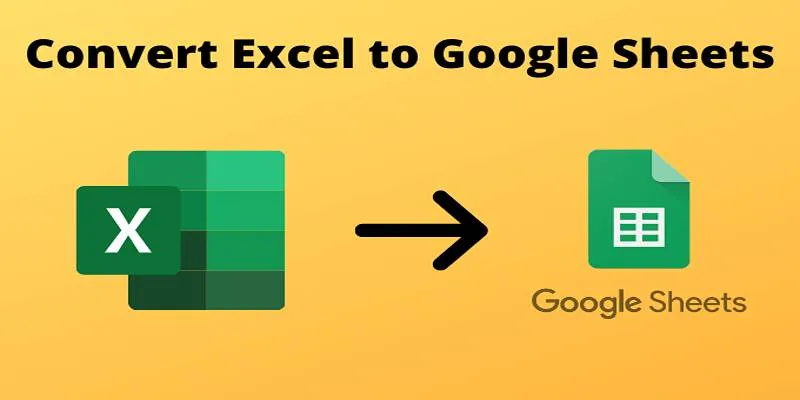

Cloud-Based Spreadsheet Tips: Convert Excel to Google Sheets

Step-by-Step Guide to Creating Canva Slideshows with Audio

Top Free Online Tools to Convert WEBP Images to JPG Format Easily

Bootable USB Creation Made Easy: 3 Tools You Should Be Using Now

KineMaster Without Limits: Removing the Logo the Right Way

mww2

mww2